

We’re kicking off a tech series to give you a behind-the-scenes look at what it takes to run a reliable and scalable blockchain data pipeline. This is the backbone of everything we do and what powers our customer-facing products. We currently run in-house node infrastructure for over 40 chains and manage a 400TB+ data warehouse.

Stay tuned for more!

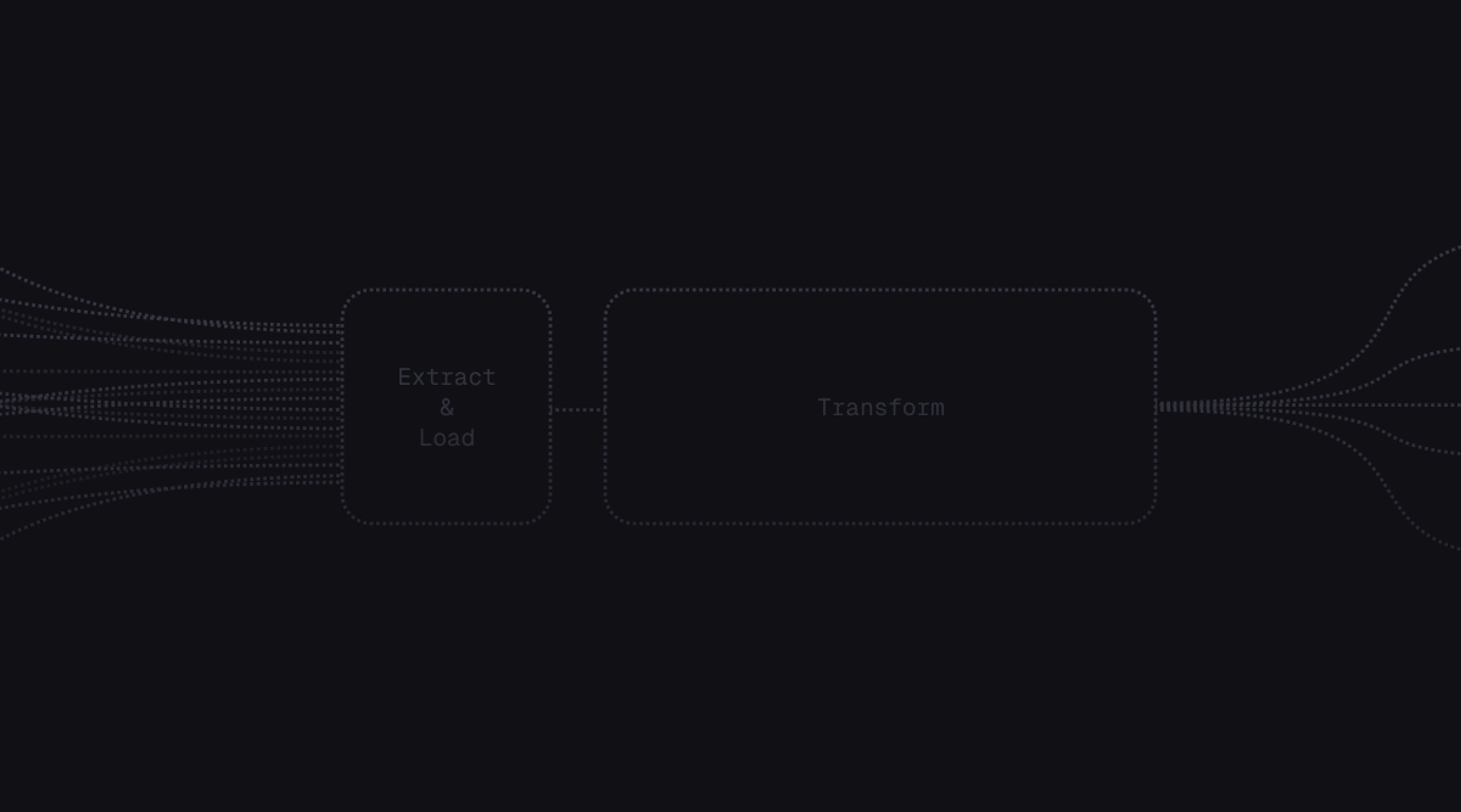

ETL (Extract, Transform, Load) has been a reliable standard for data pipelines since the 1970s. Even today, it remains a popular choice for blockchain indexers and in-house crypto data teams.

We like ETL, and it works well for certain narrow use cases, such as:

- When the business logic is simple and rarely changes, like with block explorers.

- When the data volume is small, such as protocol teams focusing on a single chain or a few contracts.

But when you need to scale across multiple chains and protocols, or iterate fast on changing business logic, ETL quickly becomes a bottleneck.

So what’s changed since the disco era? Modern cloud-based data warehouses, built to handle petabyte-scale data, have enabled us to switch the order of operations, and use ELT (Extract, Load, Transform).

ETL transforms the data before loading it into the data warehouse. ELT flips this process: data is extracted and loaded in its raw form first, and transformations are applied within the data warehouse, usually with SQL.

Our end-to-end data pipeline follows the same idea:

- Extract: We run our own nodes and pull the data straight from them.

- Load: We load both the latest and historical data into our data warehouse in its raw form.

- Transform: Using SQL, we turn that raw data into standardized metrics right inside the data warehouse.

- Serve: Our backend then connects to the data warehouse and serves up the data through our API.
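The four steps above can be sketched in a few lines. This is a minimal illustration, not our production code: an in-memory SQLite database stands in for the data warehouse, and `extract_from_node`, the `raw_events` table, and the sample records are all hypothetical. The point it shows is the ordering: raw rows land in the warehouse first, and the decimal conversion and aggregation happen afterwards in SQL.

```python
import sqlite3


def extract_from_node():
    # Extract: hypothetical stand-in for data pulled from a node.
    # Amounts are in raw base units (assumed 6 decimals), exactly as emitted.
    return [
        {"block_date": "2024-09-01", "contract": "0xpool", "event": "Swap", "amount_raw": 12_500_000},
        {"block_date": "2024-09-01", "contract": "0xpool", "event": "Swap", "amount_raw": 7_500_000},
        {"block_date": "2024-09-02", "contract": "0xpool", "event": "Swap", "amount_raw": 3_000_000},
        {"block_date": "2024-09-02", "contract": "0xpool", "event": "Mint", "amount_raw": 9_000_000},
    ]


def load_raw(conn, records):
    # Load: store every record untouched, with no filtering or unit conversion.
    conn.execute(
        "CREATE TABLE IF NOT EXISTS raw_events "
        "(block_date TEXT, contract TEXT, event TEXT, amount_raw INTEGER)"
    )
    conn.executemany(
        "INSERT INTO raw_events VALUES (?, ?, ?, ?)",
        [(r["block_date"], r["contract"], r["event"], r["amount_raw"]) for r in records],
    )


def transform(conn):
    # Transform: business logic lives in SQL and runs inside the warehouse.
    conn.execute("DROP TABLE IF EXISTS daily_volume")
    conn.execute(
        """
        CREATE TABLE daily_volume AS
        SELECT block_date, SUM(amount_raw) / 1000000.0 AS volume
        FROM raw_events
        WHERE event = 'Swap'
        GROUP BY block_date
        """
    )


def serve(conn):
    # Serve: the backend reads the standardized metric, never the node.
    rows = conn.execute("SELECT block_date, volume FROM daily_volume ORDER BY block_date")
    return [{"block_date": d, "volume": v} for d, v in rows]


if __name__ == "__main__":
    warehouse = sqlite3.connect(":memory:")
    load_raw(warehouse, extract_from_node())
    transform(warehouse)
    print(serve(warehouse))
```

Because `transform` only reads `raw_events`, changing the metric definition means editing the SQL and re-running it; the extract and load steps stay untouched.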

Unlike individual crypto researchers who might just run a single chain like Ethereum on their laptop to focus on a few smart contracts, our system has to scale across 100 blockchains, 1,000 protocols, and 100,000 unique smart contracts. We need to stay on top of all of them, and we need to do it fast.

Here’s why our scale makes things hard:

- Inconsistent node reliability: The maturity of node software varies across clients and ecosystems. If data isn't coming through reliably, there's nothing to transform. Even Paradigm’s Reth only had its first production release a little over three months ago, after two years of hardcore building.

- Constant deployment of new smart contracts: Most decentralized applications actively develop and manage 5-10 contracts across multiple chains. We need to track all of them in near real-time.

- Complex and evolving business logic: With all the design freedom that smart contracts offer, the business logic can get complicated quickly. Each protocol's contracts have to be interpreted on their own terms, and we have to handle those differences as soon as they appear.
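To make the second point concrete, here is a rough sketch of how newly deployed contracts could be spotted once raw data is in the warehouse. It relies on one fact about Ethereum-style chains: a contract-creation transaction has no `to` address, and its receipt carries the new `contractAddress`. Everything else is an assumption for illustration: the `raw_receipts` table, its column names, and the sample rows are hypothetical, and SQLite again stands in for the warehouse. Contracts created by other contracts (for example through factories) would need trace data and are not covered here.

```python
import sqlite3


def load_raw_receipts(conn, receipts):
    # Hypothetical raw table: one row per transaction receipt, stored as-is.
    conn.execute(
        "CREATE TABLE IF NOT EXISTS raw_receipts "
        "(block_number INTEGER, tx_hash TEXT, to_address TEXT, contract_address TEXT)"
    )
    conn.executemany(
        "INSERT INTO raw_receipts VALUES "
        "(:block_number, :tx_hash, :to_address, :contract_address)",
        receipts,
    )


def new_contracts_since(conn, block_number):
    # A creation transaction has a NULL recipient and a non-NULL contract address.
    rows = conn.execute(
        """
        SELECT contract_address
        FROM raw_receipts
        WHERE to_address IS NULL
          AND contract_address IS NOT NULL
          AND block_number > ?
        ORDER BY block_number
        """,
        (block_number,),
    )
    return [row[0] for row in rows]


# Made-up sample data: two deployments and one ordinary transaction.
SAMPLE_RECEIPTS = [
    {"block_number": 100, "tx_hash": "0xa1", "to_address": None, "contract_address": "0xc001"},
    {"block_number": 101, "tx_hash": "0xa2", "to_address": "0xc001", "contract_address": None},
    {"block_number": 105, "tx_hash": "0xa3", "to_address": None, "contract_address": "0xc002"},
]
```

Calling `new_contracts_since(conn, 100)` after loading the sample rows returns only the deployment from block 105, so a scheduled query like this can pick up new contracts without another trip to the node.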

How does ELT help us scale?

Since the raw data is already in our data warehouse, we can use its massive compute power to do all the heavy lifting right there. We get instant access to all of a chain’s smart contracts, without needing to go back to the node for more data. Think of it as replicating the node’s write-optimized database into an analytics-optimized one: the data warehouse.

With ETL, we'd constantly need to return to the node for each transformation, and the node isn't optimized for analytics workloads. This process can take hours or even months, depending on the size of the blockchain, the number of contracts, and the complexity of the business logic.
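The difference in node round trips can be shown with a toy comparison. This is a simplified sketch of the argument above, not a benchmark: `FakeNode` is a hypothetical stand-in that just counts full scans, the log rows are made up, and SQLite plays the role of the warehouse. In the ETL-style function every metric definition triggers another pass over the node, while the ELT-style path scans once and answers every later question from the raw table.

```python
import sqlite3


class FakeNode:
    """Hypothetical node stand-in that counts how often it is fully scanned."""

    def __init__(self, logs):
        self._logs = logs
        self.scans = 0

    def scan_logs(self):
        self.scans += 1
        return list(self._logs)


# Made-up (contract, event, amount) rows.
LOGS = [
    ("0xdex", "Swap", 100),
    ("0xdex", "Swap", 250),
    ("0xlend", "Borrow", 400),
]


def etl_metric(node, event):
    # ETL-style: the transformation runs against node output,
    # so each new or changed metric means another scan of the node.
    return sum(amount for _, name, amount in node.scan_logs() if name == event)


def elt_load(node, conn):
    # ELT-style: a single scan, with the raw rows copied into the warehouse.
    conn.execute("CREATE TABLE raw_logs (contract TEXT, event TEXT, amount INTEGER)")
    conn.executemany("INSERT INTO raw_logs VALUES (?, ?, ?)", node.scan_logs())


def elt_metric(conn, event):
    # Every metric after the load is a SQL query over the raw table.
    row = conn.execute(
        "SELECT COALESCE(SUM(amount), 0) FROM raw_logs WHERE event = ?", (event,)
    ).fetchone()
    return row[0]
```

With two metrics, the ETL-style path scans the node twice and the ELT-style path scans it once. The gap grows with every metric added or redefined, which is where the hours-to-months cost comes from on a large chain.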

Conclusion

ETL can be a solid choice when you’re dealing with smaller, more stable datasets or when the business logic doesn’t change much. If you’re only focusing on a single chain or a few smart contracts where updates are rare, the upfront transformation in ETL can make things simpler and easier to manage.

For Token Terminal, where we need to track hundreds of chains and thousands of protocols with ever-changing logic, ELT is the clear winner. It gives us the speed and flexibility we need to keep up with new blockchains and smart contract deployments, without sacrificing data accuracy.

The authors of this content, or members, affiliates, or stakeholders of Token Terminal may be participating or are invested in protocols or tokens mentioned herein. The foregoing statement acts as a disclosure of potential conflicts of interest and is not a recommendation to purchase or invest in any token or participate in any protocol. Token Terminal does not recommend any particular course of action in relation to any token or protocol. The content herein is meant purely for educational and informational purposes only, and should not be relied upon as financial, investment, legal, tax or any other professional or other advice. None of the content and information herein is presented to induce or to attempt to induce any reader or other person to buy, sell or hold any token or participate in any protocol or enter into, or offer to enter into, any agreement for or with a view to buying or selling any token or participating in any protocol. Statements made herein (including statements of opinion, if any) are wholly generic and not tailored to take into account the personal needs and unique circumstances of any reader or any other person. Readers are strongly urged to exercise caution and have regard to their own personal needs and circumstances before making any decision to buy or sell any token or participate in any protocol. Observations and views expressed herein may be changed by Token Terminal at any time without notice. Token Terminal accepts no liability whatsoever for any losses or liabilities arising from the use of or reliance on any of this content.

Stay in the loop

Join our mailing list to get the latest insights!

Continue reading

Customer stories: Token Terminal’s Data Partnership with Linea

Through its partnership with Token Terminal, Linea turns transparency into a competitive advantage and continues to build trust with its growing community.

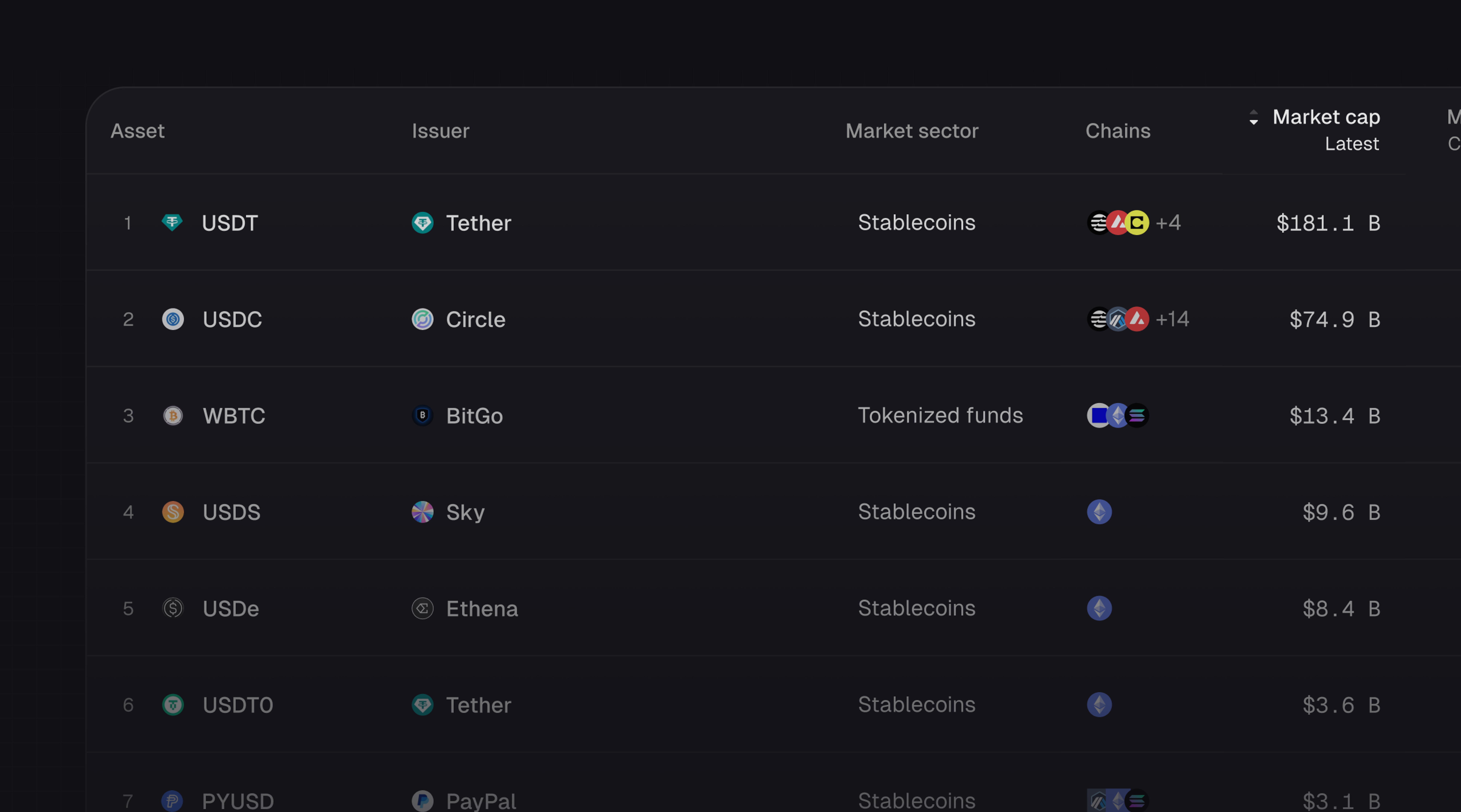

Introducing Tokenized Assets

Token Terminal is expanding its standardized onchain analytics to cover the rapidly growing category of tokenized real-world assets (RWAs) – starting with stablecoins, tokenized funds, and tokenized stocks.

Customer stories: Token Terminal’s Data Partnership with EigenCloud

Through its partnership with Token Terminal, EigenCloud turns transparency into a competitive advantage and continues to build trust with its growing community.