
In our last post, we broke down why ELT is crucial for scaling blockchain data pipelines, especially when you're managing massive amounts of data across multiple chains and protocols.

Now, let’s take that a step further. Should you stick with indexers, or move to a Data Lake approach?

Both indexers and Data Lakes play critical roles in managing and retrieving blockchain data, but they do so in different ways. Indexers quickly organize data and make it searchable for real-time querying, while Data Lakes store vast amounts of raw data that can be processed and analyzed later.

Indexers are great for immediate analytics, but they become inefficient as your blockchain data grows. Data Lakes offer a more scalable, flexible way to handle large and evolving datasets.

A lot of blockchain projects start with indexers for analytics. This works at first, but as the project grows, re-indexing becomes a hassle. Every change to business logic or to a metric calculation means reprocessing everything, and the bigger your data gets, the longer that takes: sometimes hours, and for larger datasets, days or even weeks. Welcome to crypto data’s cold start problem.
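To make the re-indexing cost concrete, here is a toy sketch of the indexer pattern. It assumes a hypothetical, heavily simplified `Block` record and an in-memory `chain` list that stands in for fetching blocks from a node; `ToyIndexer` is illustrative, not any real indexing framework. The indexer applies business logic at ingest time and keeps only the derived metric, so changing that logic means replaying every block from the start.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Block:
    """Hypothetical, heavily simplified block: height plus (sender, fee) pairs."""
    height: int
    txs: list[tuple[str, float]]


@dataclass
class ToyIndexer:
    """Toy indexer: business logic runs at ingest time; only its output is kept."""
    handler: Callable[[Block], float]
    metric: dict[int, float] = field(default_factory=dict)
    blocks_processed: int = 0

    def ingest(self, block: Block) -> None:
        # Apply the current business logic and store only the derived value.
        self.metric[block.height] = self.handler(block)
        self.blocks_processed += 1

    def reindex(self, chain: list[Block], new_handler: Callable[[Block], float]) -> None:
        # Raw blocks were never stored, so the metric cannot be recomputed
        # in place: every block has to be fetched and processed again.
        self.handler = new_handler
        self.metric.clear()
        for block in chain:
            self.ingest(block)


# Hypothetical chain of 10,000 blocks with two transactions each.
chain = [Block(h, [("0xabc", 0.1), ("0xdef", 0.2)]) for h in range(10_000)]

indexer = ToyIndexer(handler=lambda b: sum(fee for _, fee in b.txs))
for block in chain:
    indexer.ingest(block)

# The metric definition changes: count transactions instead of summing fees.
indexer.reindex(chain, lambda b: float(len(b.txs)))
print(indexer.blocks_processed)  # 20000: the full history was processed twice
```

The replay cost grows linearly with the length of the chain’s history, which is why re-indexing time keeps climbing as a chain matures.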

What’s a Data Lake?

A Data Lake stores all raw blockchain data in one place. Instead of re-indexing whenever you need new metrics, the data is already there. You just adjust your SQL queries to get what you need, when you need it.

This approach can save days of downtime on re-indexing, letting you work with the data immediately and keep your analytics flexible.

Why Data Lakes make sense

No more re-indexing. Once the raw data is loaded, you can apply transformations whenever you need them using SQL queries, instead of spending hours or days waiting for datasets to be reprocessed.
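A minimal sketch of that load-then-transform flow, using Python’s built-in `sqlite3` as a stand-in for a warehouse such as BigQuery. The `raw_transactions` schema and the `daily_transactions` view are hypothetical. The raw rows are loaded once; when the metric definition changes, only the view is redefined.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Load: raw transactions land once, untransformed (hypothetical schema).
conn.execute(
    "CREATE TABLE raw_transactions ("
    " block_time TEXT, from_address TEXT, to_address TEXT, fee REAL, status INTEGER)"
)
conn.executemany(
    "INSERT INTO raw_transactions VALUES (?, ?, ?, ?, ?)",
    [
        ("2024-05-01 10:00:00", "0xa", "0xc1", 0.5, 1),
        ("2024-05-01 11:30:00", "0xb", "0xc1", 0.3, 0),  # failed transaction
        ("2024-05-02 09:15:00", "0xa", "0xc2", 0.2, 1),
    ],
)

# Transform: the metric is only a view over the raw table.
conn.execute(
    "CREATE VIEW daily_transactions AS "
    "SELECT date(block_time) AS day, COUNT(*) AS tx_count "
    "FROM raw_transactions GROUP BY day"
)
before = conn.execute("SELECT * FROM daily_transactions ORDER BY day").fetchall()

# Business logic changes (count successful transactions only):
# redefine the view; no raw rows are reloaded or reprocessed.
conn.execute("DROP VIEW daily_transactions")
conn.execute(
    "CREATE VIEW daily_transactions AS "
    "SELECT date(block_time) AS day, COUNT(*) AS tx_count "
    "FROM raw_transactions WHERE status = 1 GROUP BY day"
)
after = conn.execute("SELECT * FROM daily_transactions ORDER BY day").fetchall()

print(before)  # [('2024-05-01', 2), ('2024-05-02', 1)]
print(after)   # [('2024-05-01', 1), ('2024-05-02', 1)]
```

On a large table the new definition still scans the raw rows, but as one SQL query over data already in the lake rather than a replay of the chain.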

Simpler custom analytics. With traditional indexers, creating custom metrics can require thousands of lines of code. With a Data Lake, teams can often achieve the same result in just 10 to 20 lines of SQL. Because analytics become much easier to modify and extend, this can cut resource needs by 50% and double team efficiency.
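For a sense of scale on the SQL side, here is a hypothetical custom metric, unique and returning users per contract, written as a single query of under 20 lines. It uses an illustrative `raw_transactions` schema (not a real Token Terminal table) and runs on Python’s `sqlite3` so it is self-contained; a production warehouse dialect may differ slightly.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE raw_transactions ("
    " block_time TEXT, from_address TEXT, to_address TEXT, fee REAL, status INTEGER)"
)
conn.executemany(
    "INSERT INTO raw_transactions VALUES (?, ?, ?, ?, ?)",
    [
        ("2024-05-01", "0xa", "0xc1", 0.5, 1),
        ("2024-05-02", "0xa", "0xc1", 0.4, 1),
        ("2024-05-01", "0xb", "0xc1", 0.3, 1),
        ("2024-05-02", "0xb", "0xc2", 0.2, 1),
        ("2024-05-03", "0xc", "0xc2", 0.1, 0),  # failed, excluded below
    ],
)

# Custom metric: unique users per contract, and how many came back on another day.
RETURNING_USERS_SQL = """
WITH contract_wallets AS (
    SELECT to_address AS contract,
           from_address AS wallet,
           COUNT(DISTINCT date(block_time)) AS active_days
    FROM raw_transactions
    WHERE status = 1
    GROUP BY to_address, from_address
)
SELECT contract,
       COUNT(*) AS unique_users,
       SUM(CASE WHEN active_days > 1 THEN 1 ELSE 0 END) AS returning_users
FROM contract_wallets
GROUP BY contract
ORDER BY unique_users DESC, contract
"""

rows = conn.execute(RETURNING_USERS_SQL).fetchall()
print(rows)  # [('0xc1', 2, 1), ('0xc2', 1, 0)]
```

Changing the definition, for example to count a user as returning only with at least three active days, is a one-line edit to the `CASE` expression.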

Scalable and future-proof infrastructure. Data Lakes are built to scale to petabytes of data. They can keep up as your blockchain grows from thousands to millions of deployed contracts, with hundreds of teams building on top of it. That removes the bottleneck for those teams, and for the broader community of analysts, to make data-driven decisions and build category-defining winners. It’s like providing Amplitude, the product analytics platform, for your entire ecosystem.

The industry is shifting to Data Lakes

Polkadot’s DotLake, built by Parity, is a prime example of the shift to Data Lakes. Launched on Google Cloud Platform (GCP), DotLake stores the entire history of over 70 parachains in the Polkadot ecosystem. It optimizes costs and scalability using tools like BigQuery and Cloud Storage, allowing teams to easily query data. In 2024, Parity plans to make the datasets public, offering dashboards and metrics to democratize access to blockchain insights.

NEAR’s Lake Framework is another forward-thinking Data Lake setup. It uses AWS S3 for data storage and is designed to be highly accessible, even running on small devices with minimal resource consumption. The cost of accessing blockchain data from NEAR’s Lake Framework is incredibly low, at around $20 per month, making it an efficient and scalable solution for anyone looking to work with NEAR’s data.

By adopting a Data Lake, you’re following the lead of ecosystems like Polkadot and NEAR, which are already leveraging these benefits to scale efficiently and open up data access to their entire communities.

Ready to build a Data Lake?

At Token Terminal, we build Data Lakes for every blockchain we support. It’s how we manage analytics for hundreds of chains and thousands of smart contracts without ever getting bogged down by re-indexing.

If you’re ready to level up from indexers and start scaling with a Data Lake, let’s chat. We can help you set up a flexible, future-proof system that works for your project and saves you hundreds of hours of maintenance and re-indexing.

The authors of this content, or members, affiliates, or stakeholders of Token Terminal may be participating or are invested in protocols or tokens mentioned herein. The foregoing statement acts as a disclosure of potential conflicts of interest and is not a recommendation to purchase or invest in any token or participate in any protocol. Token Terminal does not recommend any particular course of action in relation to any token or protocol. The content herein is meant purely for educational and informational purposes only, and should not be relied upon as financial, investment, legal, tax or any other professional or other advice. None of the content and information herein is presented to induce or to attempt to induce any reader or other person to buy, sell or hold any token or participate in any protocol or enter into, or offer to enter into, any agreement for or with a view to buying or selling any token or participating in any protocol. Statements made herein (including statements of opinion, if any) are wholly generic and not tailored to take into account the personal needs and unique circumstances of any reader or any other person. Readers are strongly urged to exercise caution and have regard to their own personal needs and circumstances before making any decision to buy or sell any token or participate in any protocol. Observations and views expressed herein may be changed by Token Terminal at any time without notice. Token Terminal accepts no liability whatsoever for any losses or liabilities arising from the use of or reliance on any of this content.

Stay in the loop

Join our mailing list to get the latest insights!

Continue reading

Customer stories: Token Terminal’s Data Partnership with Linea

Through its partnership with Token Terminal, Linea turns transparency into a competitive advantage and continues to build trust with its growing community.

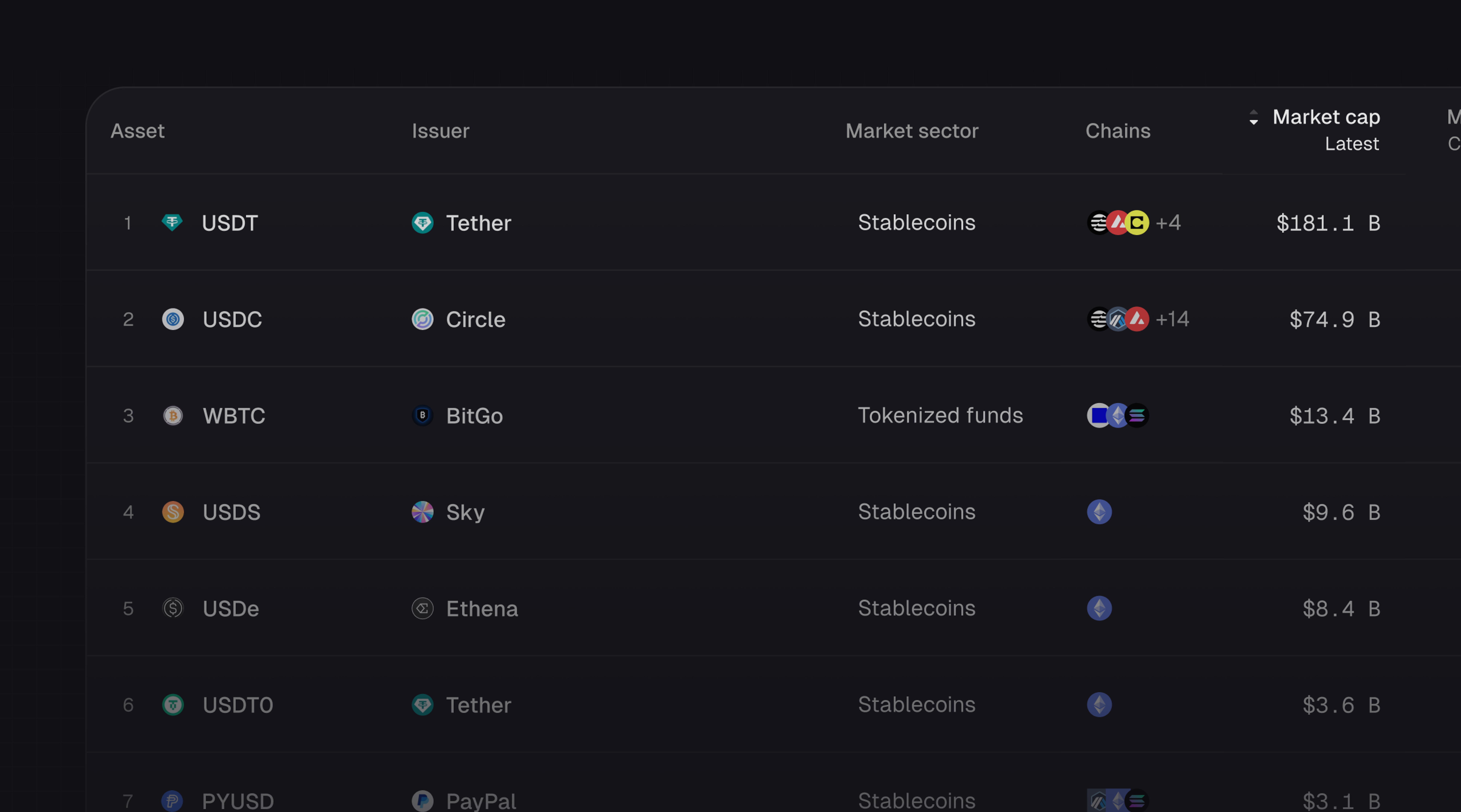

Introducing Tokenized Assets

Token Terminal is expanding its standardized onchain analytics to cover the rapidly growing category of tokenized real-world assets (RWAs) – starting with stablecoins, tokenized funds, and tokenized stocks.

Customer stories: Token Terminal’s Data Partnership with EigenCloud

Through its partnership with Token Terminal, EigenCloud turns transparency into a competitive advantage and continues to build trust with its growing community.